Connect your nodegoat environment to Wikidata, BnF, Transkribus, Zotero, and others

CORE Admin

The nodegoat Guides have been extended with a new section on 'Ingestion Processes'. An Ingestion Process allows you to query an external resource and ingest the returned data in your nodegoat environment. Once the data is stored in nodegoat, it can be used for tagging, referencing, filtering, analysis, and visualisation purposes.

You can ingest data in order to gather a set of people or places that you intend to use in your research process. You can also ingest data that enriches your own research data. Any collection of primary sources or secondary sources that have been published to the web can be ingested as well. This means that you can ingest transcription data from Transkribus, or your complete (or filtered) Zotero library.

The development of the Ingestion Process was part of the project 'Dynamic Data Ingestion (DDI)' (presented in this workshop series) and builds upon the Linked Data Resource feature (initially commissioned by the TIC-project in 2015 and extended in collaboration with ADVN in 2019).

Every nodegoat user can make use of these features. In addition to the examples listed below, any endpoint that outputs JSON or XML can be queried. nodegoat data can be exported in CSV and ODT formats, or published via the nodegoat API as JSON and JSON-LD.

Wikidata

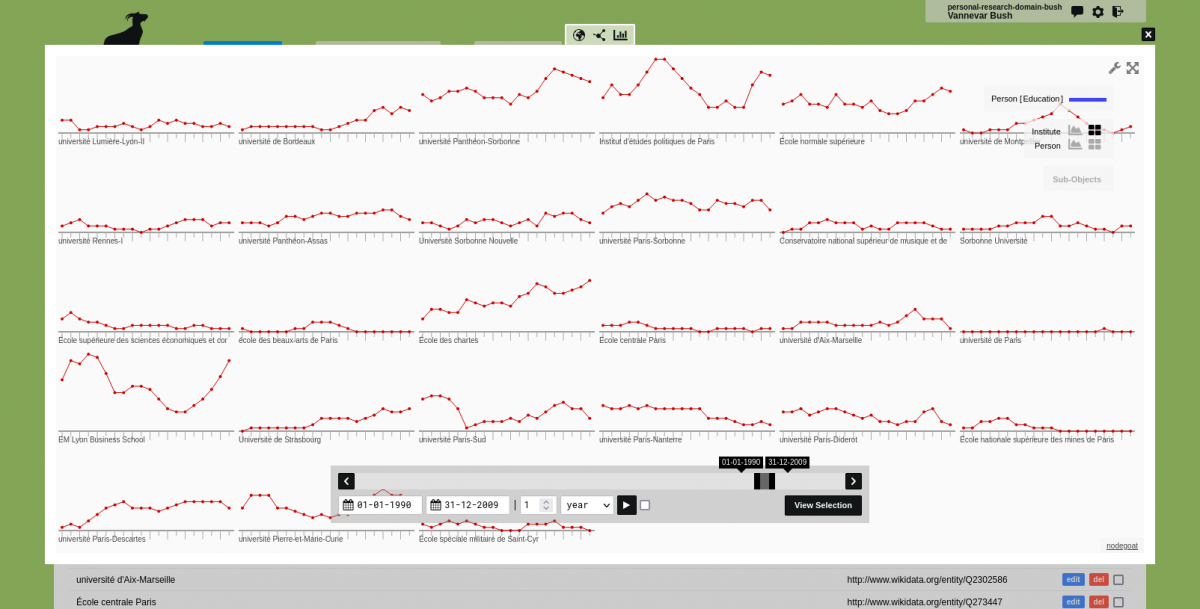

The first two guides deal with setting up a data model for places and people, and ingesting geographical and biographical data from Wikidata: 'Ingest Geographical Data', 'Ingest Biographical Data'. A number of SPARQL queries are needed to gather the selected data. As writing these queries can be challenging, we have added two commented queries (here and here) that explain the rationale behind them.
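To give a rough idea of what such a query looks like, here is a minimal Python sketch that is not taken from the guides. It assumes the public Wikidata SPARQL endpoint and the Wikidata identifiers P31 (instance of), Q3918 (university), and P625 (coordinate location). The names `build_request_url` and `extract_rows` are hypothetical helpers introduced for illustration only. They are not part of nodegoat, which configures queries like this through its own interface.

```python
from urllib.parse import urlencode

# Public Wikidata SPARQL endpoint.
WIKIDATA_SPARQL_ENDPOINT = "https://query.wikidata.org/sparql"

# Illustrative query: universities that have a coordinate location.
UNIVERSITY_QUERY = """
SELECT ?university ?universityLabel ?coordinates WHERE {
  ?university wdt:P31 wd:Q3918 .        # instance of: university
  ?university wdt:P625 ?coordinates .   # coordinate location
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en" . }
}
LIMIT 10
"""


def build_request_url(query: str) -> str:
    """Return a GET URL that asks the endpoint for JSON results."""
    params = urlencode({"query": query.strip(), "format": "json"})
    return WIKIDATA_SPARQL_ENDPOINT + "?" + params


def extract_rows(response: dict) -> list:
    """Flatten a SPARQL JSON result into one plain dict per row."""
    rows = []
    for binding in response["results"]["bindings"]:
        rows.append({name: cell["value"] for name, cell in binding.items()})
    return rows
```

The URL returned by `build_request_url(UNIVERSITY_QUERY)` can be fetched with any HTTP client. `extract_rows` then turns the standard SPARQL JSON result structure (`results` → `bindings`) into flat rows that are easier to map onto a data model.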

These first two guides illustrate a common point in working with relational data (e.g. coming from graph databases, or relational databases): you need to first ingest the referenced Objects (in this case universities) before you can make references to these Objects (in this case people attending the universities).
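The ordering constraint described above can be sketched in a few lines of Python. This is only an illustration of the principle, not how nodegoat implements ingestion. The function name, the record fields (`external_id`, `name`, `educated_at`), and the placeholder identifiers are all assumptions made for this example.

```python
def ingest_in_dependency_order(universities, people):
    """Store universities first, then people whose references point at them.

    Returns the stored universities, the stored people (with references
    resolved to internal ids), and a list of references that could not be
    resolved because the referenced university was never ingested.
    """
    internal_ids = {}          # external identifier -> internal id
    stored_universities = []
    for record in universities:
        internal_id = len(stored_universities) + 1
        internal_ids[record["external_id"]] = internal_id
        stored_universities.append({"id": internal_id, "name": record["name"]})

    stored_people = []
    unresolved = []
    for record in people:
        references = []
        for external_id in record["educated_at"]:
            if external_id in internal_ids:
                references.append(internal_ids[external_id])
            else:
                # The referenced Object does not exist yet, so no link can be made.
                unresolved.append((record["name"], external_id))
        stored_people.append({"name": record["name"], "educated_at": references})

    return stored_universities, stored_people, unresolved


# Placeholder data; the identifiers are invented for this example.
universities = [
    {"external_id": "UNI_A", "name": "University A"},
    {"external_id": "UNI_B", "name": "University B"},
]
people = [
    {"name": "Person 1", "educated_at": ["UNI_A", "UNI_B"]},
    {"name": "Person 2", "educated_at": ["UNI_C"]},
]
stored_unis, stored_people, unresolved = ingest_in_dependency_order(universities, people)
```

In this sketch, the reference from "Person 2" ends up in `unresolved` because "UNI_C" was never ingested, which is the situation the ingestion order is meant to avoid.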

The third guide covers the importance of external identifiers. External identifiers can be added manually, as described in the guide 'Add External Identifiers', or ingested from a resource like Wikidata, as described in the newly added guide 'Ingest External Identifiers'.