nodegoat Workshop series organised by the SNSF SPARK project "Dynamic Data Ingestion"

CORE Admin

nodegoat has been extended with new features that allow you to ingest data from external resources. You can use them to enrich your dataset with contextual data from sources like Wikidata, or to load publications via a library API or SPARQL endpoint. This extension of nodegoat has been developed as part of the SNSF SPARK project 'Dynamic Data Ingestion (DDI): Server-side data harmonization in historical research. A centralized approach to networking and providing interoperable research data to answer specific scientific questions'. The project was initiated and led by Kaspar Gubler of the University of Bern.

Because this feature is part of nodegoat, it can be used by any nodegoat user. And because Ingestion processes can be fully customised, they can be used to query any endpoint that publishes JSON data. This allows you to use nodegoat as a graphical user interface to query, explore, and store Linked Open Data (LOD) from your own environment.

These newly developed functionalities build upon the Linked Data Resource feature that was added to nodegoat in 2015. Its initial development was commissioned by the TIC project at Ghent University and Maastricht University. The feature was further extended in 2019 during a project of the ADVN.

Workshop Series

We will organise a series of four virtual workshops to share the results of the project and explore nodegoat's data ingestion capabilities. The workshops will take place on 28-04-2021, 05-05-2021, 12-05-2021, and 26-05-2021, each from 14:00 to 17:00 CEST. They will be held on Zoom and recorded, so you can watch a session afterwards to catch up.

The first two sessions will provide a general introduction to nodegoat: in the first session you will learn how to configure your nodegoat environment, and the second session will be devoted to importing a dataset. In the third session you will learn how to run Ingestion processes to enrich a dataset with data from external sources. The fourth session will be used to query other data sources in order to ingest additional data.

The setup of the workshop series allows new users to start from scratch, while existing users can join at a later session. You can register for these workshops until 25-04-2021 via kaspar.gubler@hist.unibe.ch.

Session 1: Data Modelling (28-04-2021, 14:00-17:00 CEST)

After a brief introduction to nodegoat, we will implement a data model in your own nodegoat environment. This data model will focus on people and publications. Once the data model is up and running, we will manually enter a small amount of data to evaluate your data model.

If you do not have a nodegoat account yet, please request an account. If your institute has its own nodegoat installation, you can use your institutional nodegoat account.

Session 2: Importing Data (05-05-2021, 14:00-17:00 CEST)

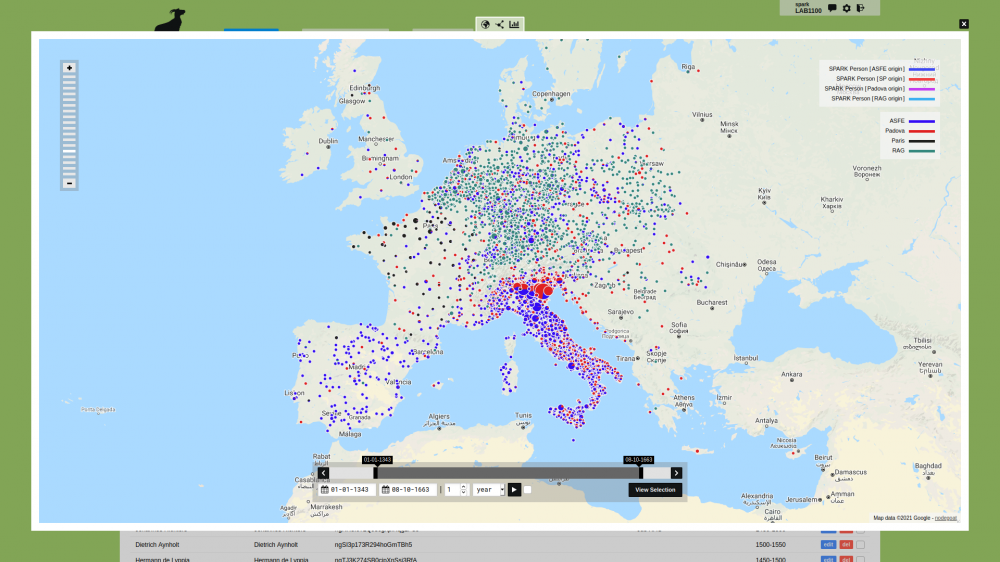

In this session we will import a CSV file that contains a subset of the medieval scholars recorded in the Repertorium Academicum Germanicum. We will also import spatial and temporal data to explore spatial and temporal filters and visualisations.

After the first two sessions you will have a fully configured nodegoat environment with an operational data model that has been populated with a well-structured dataset. These elements are required to follow along in sessions 3 and 4.

Session 3: Ingesting Biographical Data (12-05-2021, 14:00-17:00 CEST)

Now that you have a configured nodegoat environment and a dataset, we can start enriching this dataset by means of Ingestion processes. We will set up a number of Linked Data Resources and configure Ingestion processes that allow you to ingest data related to the Objects in your dataset (e.g. query Wikidata based on GND numbers and store the returned biographical data in your environment).
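In nodegoat, Ingestion processes are set up through its graphical interface, so the following is not nodegoat's implementation. It is a minimal standalone sketch of the kind of request such a process sends, assuming the public Wikidata Query Service endpoint and Wikidata's GND ID property (P227). The function names are hypothetical. The sketch builds a SPARQL query for one GND number, encodes it as a request for JSON results, and flattens the standard SPARQL JSON results format into one record per row.

```python
import json
from urllib.parse import urlencode

# Public Wikidata Query Service endpoint (assumed target for this sketch).
WIKIDATA_SPARQL = "https://query.wikidata.org/sparql"


def build_gnd_query(gnd_id: str) -> str:
    """Return a SPARQL query that looks up a person by GND number (hypothetical helper)."""
    # Reject characters that could break out of the string literal in the query.
    if not gnd_id or any(ch in gnd_id for ch in '"\\\n'):
        raise ValueError(f"unexpected GND number: {gnd_id!r}")
    # P227 = GND ID, P569 = date of birth, P570 = date of death.
    return f"""SELECT ?person ?personLabel ?birth ?death WHERE {{
  ?person wdt:P227 "{gnd_id}" .
  OPTIONAL {{ ?person wdt:P569 ?birth . }}
  OPTIONAL {{ ?person wdt:P570 ?death . }}
  SERVICE wikibase:label {{ bd:serviceParam wikibase:language "en,de" . }}
}}"""


def build_request_url(gnd_id: str) -> str:
    """Encode the query as a GET request that asks for SPARQL JSON results."""
    params = urlencode({"query": build_gnd_query(gnd_id), "format": "json"})
    return f"{WIKIDATA_SPARQL}?{params}"


def parse_bindings(response_text: str) -> list[dict[str, str]]:
    """Flatten a SPARQL 1.1 JSON results document into one dict per result row."""
    data = json.loads(response_text)
    # Each binding maps a variable name to {"type": ..., "value": ...};
    # variables left unbound by an OPTIONAL clause are absent from the binding.
    return [
        {var: cell["value"] for var, cell in binding.items()}
        for binding in data["results"]["bindings"]
    ]
```

To run the query, the URL can be fetched with `urllib.request` (Wikidata asks clients to send a descriptive User-Agent header) and the response body passed to `parse_bindings`. In nodegoat itself, mapping such returned values onto the Objects in your environment is what the Ingestion process configuration handles.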

We will also look at some simple Conversion scripts that allow you to align the external data formats with the data in your own environment.

Should you already have a nodegoat project up and running in which you have manually entered data, you can join this session to learn how to enrich your data with data coming from external resources.

Session 4: Ingesting Related Data (26-05-2021, 14:00-17:00 CEST)

We will use this session to explore how to ingest data from other data sources. These additional datasets can be related to the data in your environment, e.g. query a library to ingest all publications by the people in your database. You can also opt to ingest unrelated datasets, e.g. query Wikidata to ingest all castles in the Duchy of Brabant.

In this session we will also further explore the Conversion scripts in order to ingest the data in a consistent manner.

You can learn more about the project and the workshop here.
