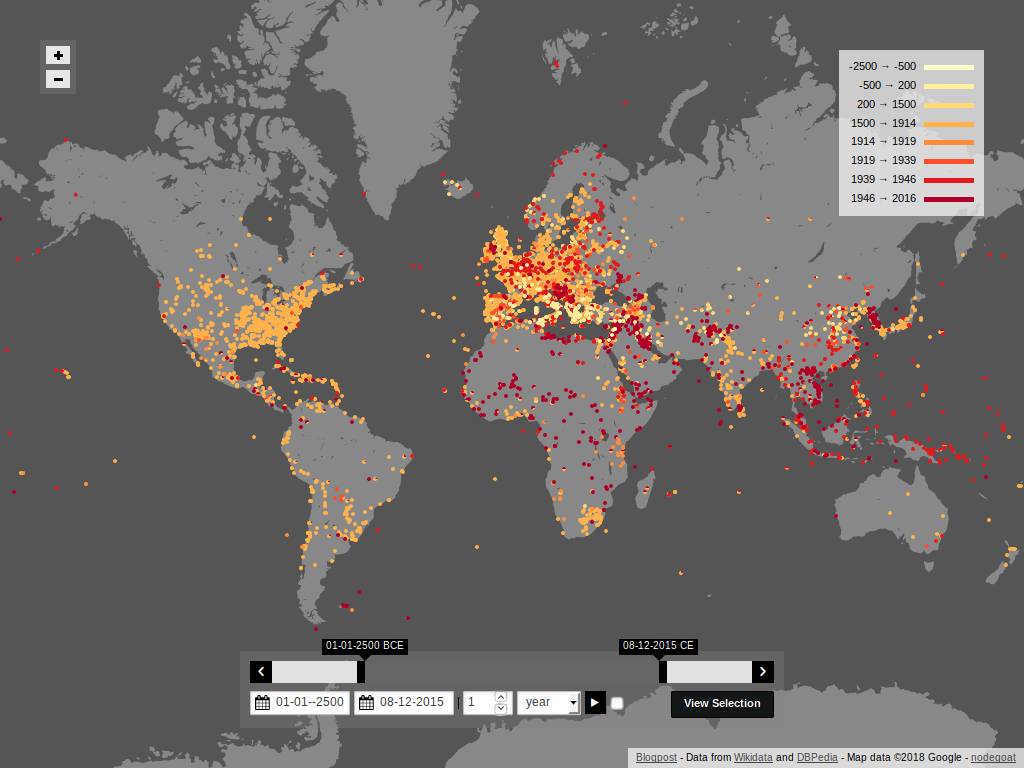

This week we gave a two-day workshop on data modeling and database development for historians, as part of the course 'Databases for young historians'. The course was sponsored by the Huizinga Instituut, Posthumus Instituut, Huygens-ING and the Amsterdam Centre for Cultural Heritage and Identity (ACHI, UvA), and was hosted by Huygens-ING.

We had a great time working with a group of historians who were eager to learn how to conceptualise data models and how to set up databases. We discussed a couple of common issues that come up when historians start to think in terms of 'data':

- How to determine the scope of your research?

- How to deal with unknown/uncertain primary source material?

- How to use/import 'structured' data?

- How to reference entries in a dataset and how to deal with conflicting sources?

- How to deal with unique/specific objects in a table/type?
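To make a few of these questions a bit more concrete, here is a minimal sketch in Python with SQLite of how uncertain dates and conflicting sources could be stored in a classical relational setup. This is not the model used in the workshop or in nodegoat: the table names, column names and sample records are all hypothetical, and serve only to illustrate one possible approach, in which every claim is stored as a separate statement that points to its source.

```python
import sqlite3

# In-memory database; every table, column and record below is made up for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when asked to

conn.executescript("""
CREATE TABLE person (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE source (
    id       INTEGER PRIMARY KEY,
    citation TEXT NOT NULL
);
-- One row per claim: a source states something about a person.
CREATE TABLE birth_statement (
    id         INTEGER PRIMARY KEY,
    person_id  INTEGER NOT NULL REFERENCES person(id),
    source_id  INTEGER NOT NULL REFERENCES source(id),
    birth_year INTEGER,  -- NULL when the source gives no year
    certainty  TEXT NOT NULL
               CHECK (certainty IN ('certain', 'uncertain', 'unknown'))
);
""")

conn.execute("INSERT INTO person (id, name) VALUES (1, 'Example Person')")
conn.executemany(
    "INSERT INTO source (id, citation) VALUES (?, ?)",
    [
        (1, "Hypothetical baptism register"),
        (2, "Hypothetical tax record"),
        (3, "Hypothetical letter"),
    ],
)
conn.executemany(
    "INSERT INTO birth_statement (person_id, source_id, birth_year, certainty) "
    "VALUES (?, ?, ?, ?)",
    [
        (1, 1, 1650, "certain"),
        (1, 2, 1652, "uncertain"),  # conflicts with the baptism register
        (1, 3, None, "unknown"),    # the source mentions a birth but no year
    ],
)

# People for whom the sources disagree: more than one distinct known birth year.
conflicts = conn.execute("""
    SELECT p.name, COUNT(DISTINCT b.birth_year) AS distinct_years
    FROM person AS p
    JOIN birth_statement AS b ON b.person_id = p.id
    GROUP BY p.id
    HAVING COUNT(DISTINCT b.birth_year) > 1
""").fetchall()

for name, distinct_years in conflicts:
    print(f"{name}: {distinct_years} conflicting birth years")
```

Because every statement keeps its own reference to a source, conflicting claims can sit side by side instead of overwriting each other, and an unknown year stays empty rather than being guessed.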

These points were taken by the horns (pun intended) when every participant went on to conceptualise their data model. To get a feel for classical database software (tables, primary keys, foreign keys, forms, etc.), they set up a database in LibreOffice Base. Finally, each participant created their own data model in nodegoat and presented their model and first bits of data.[....]