What is a Relational Database?

CORE Admin

At a certain moment in your research process, you might decide that you need to order your material in a structured format. A reason could be that there are too many different people in your body of research and it's becoming hard to keep track of them, let alone their different attributes. Another reason could be that you have repetitive sources, like letters or books, that you want to store and include in your analysis.

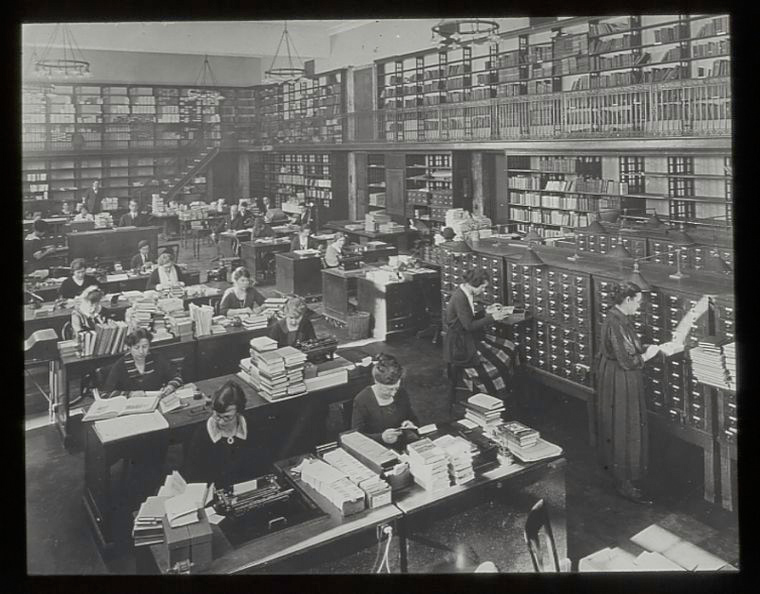

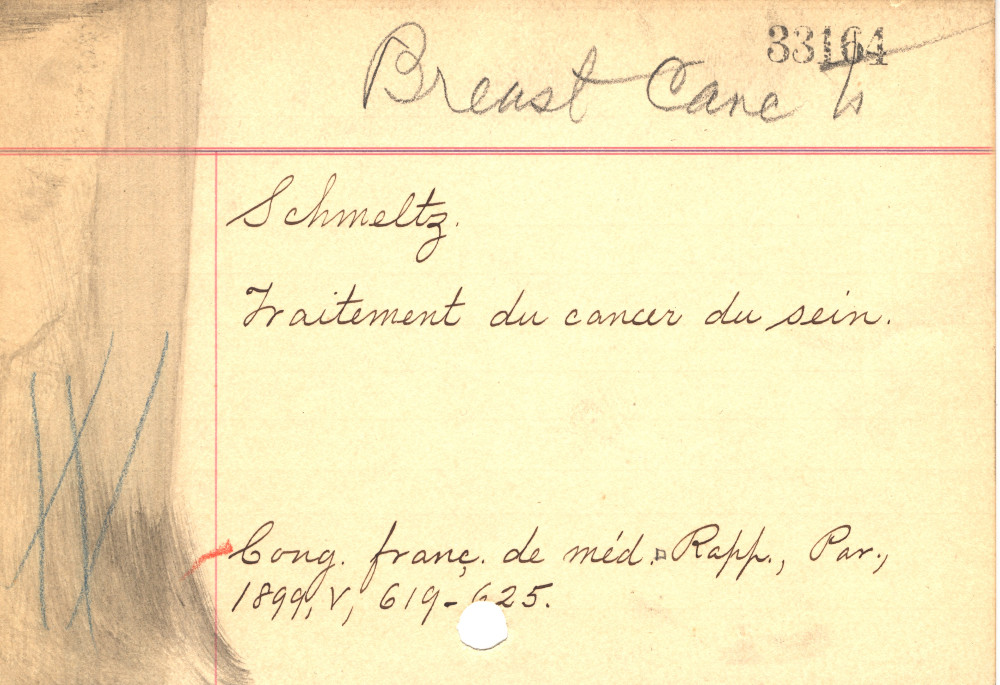

In the old days, you would get yourself a card catalogue and start reworking your notes onto these little handy cards.

Nowadays, the most common step researchers take in this situation is to start using a spreadsheet to store their information. Information that has been noted on notepads or typed out in word processing documents is transferred to an application like Microsoft Excel, LibreOffice/OpenOffice Calc, or Google Sheets.

This move allows you to easily keep track of all your information and gives you the ability to perform simple filters, counts, or use this data as input for visualisation applications.

However, as soon as some complexity becomes part of your data (e.g. relationships, uncertainty, and exceptions), this approach becomes problematic. In this blog post, we aim to demonstrate some of these challenges and give insights into how to overcome them. By doing so, we hope to familiarise researchers who have no experience in data modelling with the concept of a relational database.

Let's take the example of a small research project on correspondence networks. This can be a body of letters of one person who sent/received letters to/from many other people, or a body of letters with multiple different senders and recipients.

In its most straightforward form, you would create a spreadsheet with the following columns: date, sender, recipient.

This can be expanded to also include information on the kind of letter, source references, and the city where the letter was sent and received (scroll to the right to see all the columns).

This already generates quite an extensive body of information that can be used to count, filter, and analyse the letters. Once more data has been entered, you could answer questions like: with whom was Ľudovít Štúr most in contact between 1841 and 1845? You can also use this spreadsheet to visualise a network and analyse central or peripheral positions of correspondents.
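To make the counting step concrete, here is a minimal Python sketch of such a question asked of a flat, one-row-per-letter table. The rows are invented purely for illustration and are not historical records, and the function name `most_frequent_contacts` is our own.

```python
from collections import Counter
from datetime import date

# Invented example rows (NOT historical data): one row per letter,
# with the same columns as the simple spreadsheet: date, sender, recipient.
letters = [
    {"date": date(1841, 3, 2), "sender": "Ľudovít Štúr", "recipient": "Jacob Grimm"},
    {"date": date(1842, 7, 15), "sender": "Jacob Grimm", "recipient": "Ľudovít Štúr"},
    {"date": date(1843, 1, 20), "sender": "Ľudovít Štúr", "recipient": "Karl Lachmann"},
    {"date": date(1846, 5, 5), "sender": "Ľudovít Štúr", "recipient": "Karl Lachmann"},
    {"date": date(1844, 9, 9), "sender": "Karl Lachmann", "recipient": "Jacob Grimm"},
]


def most_frequent_contacts(rows, person, start_year, end_year):
    """Count letters exchanged between `person` and each other correspondent."""
    counts = Counter()
    for row in rows:
        # Skip letters outside the requested period (years are inclusive).
        if not start_year <= row["date"].year <= end_year:
            continue
        if row["sender"] == person:
            counts[row["recipient"]] += 1
        elif row["recipient"] == person:
            counts[row["sender"]] += 1
    # Most frequent correspondent first.
    return counts.most_common()


print(most_frequent_contacts(letters, "Ľudovít Štúr", 1841, 1845))
```

Note that the comparison `row["sender"] == person` only works when every name is spelled exactly the same way in every row.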

Yet when the amount of data in the spreadsheet grows, this approach becomes problematic. This is because we rely on the name of a person to identify that person. Even though this is often the most logical thing to do, it is difficult to avoid ambiguities with this approach. Apart from typos, like 'Lachmann Karl' vs 'Lachmann Karl', you also might need to deal with multiple spellings of one name, like 'Jacob Grimm' vs 'Jakob Grimm'. To overcome this, it is better to work with one unique identifier per person. This identifier can be a numerical value, like 0351, or a short string that makes sense to you, like 'JGr', or you could use an external identifier, like a VIAF number: '31997136'. This challenge is not unique to people and also applies to the names of cities (e.g. 'Pressburg' vs 'Bratislava', 'Lemberg' vs 'Lemberg').
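The effect of spelling variants on a simple count can be sketched in a few lines of Python. The list of sender names and the `name_to_id` mapping are hypothetical; only the identifier 'JGr' and the two spellings are taken from the example above.

```python
from collections import Counter

# Hypothetical 'sender' column in which one person appears under two spellings.
sender_names = ["Jacob Grimm", "Jakob Grimm", "Jacob Grimm"]

# Counting by name treats the two spellings as two different people.
counts_by_name = Counter(sender_names)

# Hypothetical mapping from every known spelling to one identifier per person.
name_to_id = {
    "Jacob Grimm": "JGr",
    "Jakob Grimm": "JGr",
}

# Counting by identifier brings the three letters back together.
counts_by_id = Counter(name_to_id[name] for name in sender_names)

print(counts_by_name)
print(counts_by_id)
```

In practice you would enter the identifier directly when recording a letter, so that a mapping like this is never needed to repair the data afterwards.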

Another problem arises when we want to include additional attributes for people and cities in this dataset. It might be relevant to filter the letters on the gender or the capacity of the sender/recipient. This would allow you to perform a filter like: list all the letters sent to Ľudovít Štúr by philologists. If you would also like to create a map of the network, you need to include the latitude and longitude values of the sending and receiving locations. The spreadsheet now looks like this (scroll to the right to see all the columns):

Even though it is now possible to perform the filter mentioned above and you are able to create a map of this network, you have to repeat a lot of information. Not only does this lead to a lot of redundant information, you are also doing unnecessary work.

To overcome these challenges, we introduce the concept of relationality. This means that we step away from the process of storing all information in one overview (i.e. one massive spreadsheet) and start storing information in multiple locations. We then make relationships between these locations in order to allow these different locations to communicate with each other. This can be done with ease in any spreadsheet application by using multiple sheets.

In our example, we rename 'Sheet 1' to 'Letters' and add two new sheets: 'People' and 'Cities'. We add a column called 'ID' to each sheet where we can store unique identifiers for each letter, person, and city. This allows us now to transfer all the personal information to the 'People' sheet and all the information on cities to the 'Cities' sheet. Click on the name of each sheet to switch between the sheets.

We now have three kinds of information stored at different locations communicating with each other by sharing their unique identifiers.
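The same three-sheet setup can be sketched in Python as three dictionaries keyed by ID. The IDs ('P1', 'C1', 'L1'), the field names, and the two letters are assumptions made for this illustration, not data from an actual project.

```python
# 'People' sheet: each person is described once.
people = {
    "P1": {"name": "Jacob Grimm", "occupation": "philologist"},
    "P2": {"name": "Ľudovít Štúr", "occupation": "philologist"},
}

# 'Cities' sheet: coordinates are stored once per city.
cities = {
    "C1": {"name": "Berlin", "latitude": 52.52, "longitude": 13.405},
    "C2": {"name": "Bratislava", "latitude": 48.1486, "longitude": 17.1077},
}

# 'Letters' sheet: invented letters that only hold IDs pointing to the other sheets.
letters = {
    "L1": {"date": "1842-07-15", "sender": "P1", "recipient": "P2",
           "sent_from": "C1", "received_in": "C2"},
    "L2": {"date": "1843-01-20", "sender": "P2", "recipient": "P1",
           "sent_from": "C2", "received_in": "C1"},
}


def resolve_letter(letter_id):
    """Follow the IDs of one letter to the names stored in People and Cities."""
    letter = letters[letter_id]
    return {
        "date": letter["date"],
        "sender": people[letter["sender"]]["name"],
        "recipient": people[letter["recipient"]]["name"],
        "sent_from": cities[letter["sent_from"]]["name"],
        "received_in": cities[letter["received_in"]]["name"],
    }


print(resolve_letter("L1"))
```

Correcting a name or a coordinate now means changing one entry in one place, however many letters refer to it.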

The added benefit of this is that we can now add additional information to People and Cities, like alternative spellings, historical names, and external identifiers. You could even decide to rely completely on these external identifiers for the relationships within your dataset (see this blog post for more information on the benefits of using external identifiers in your dataset). To get some visual feedback on the identifiers, you can add additional columns to the Letters sheet that display the names of the sender and recipient based on the values entered in the People sheet, by using a VLOOKUP function.
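In Excel or Google Sheets, such a lookup might look like `=VLOOKUP(B2, People!A:B, 2, FALSE)`, assuming the sender ID sits in cell B2 and the People sheet has IDs in column A and names in column B (LibreOffice Calc uses a slightly different syntax for sheet references). The Python sketch below mimics that behaviour with a dictionary; the sheet contents and column names are hypothetical.

```python
# Hypothetical 'People' sheet: one row per person.
people_sheet = [
    {"ID": "P1", "Name": "Jacob Grimm"},
    {"ID": "P2", "Name": "Ľudovít Štúr"},
]

# Hypothetical 'Letters' sheet that refers to people by ID only.
letters_sheet = [
    {"ID": "L1", "Sender": "P1", "Recipient": "P2"},
    {"ID": "L2", "Sender": "P2", "Recipient": "P9"},  # 'P9' is missing from People
]

# Build the lookup once: ID -> name.
name_by_id = {row["ID"]: row["Name"] for row in people_sheet}


def with_names(letter):
    """Return a copy of a letter row with two extra, human-readable columns."""
    return {
        **letter,
        # Like an exact-match VLOOKUP, show '#N/A' when the ID is unknown.
        "Sender name": name_by_id.get(letter["Sender"], "#N/A"),
        "Recipient name": name_by_id.get(letter["Recipient"], "#N/A"),
    }


letters_with_names = [with_names(letter) for letter in letters_sheet]
for row in letters_with_names:
    print(row)
```

The name columns are derived from the IDs and never typed in by hand, so a '#N/A' immediately flags an identifier that has not been added to the People sheet yet.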

Of course, a couple of spreadsheets are not the same as a database, but that's not the point here. The point is that if you are able to come up with a setup (a 'data model') in which you store different kinds of information, you understand the concept that lies behind every relational database. You can now accommodate the fact that letters and persons require different attributes with respect to their sources and your purpose. You can now also adequately describe the relationships between these different classes of information. Once you can move comfortably between your sheets and relationships, you can translate this setup to an abstract data model, and use this as a basis when you start working with a database.

To get going, you could start by translating the three sheets shown above into a conceptual data model. Once you've done that, you can create a logical data model. Finally, you could use a database application to translate this model to an interface.

Once you have this data and all the relationships available in a database, you can create a query like: find all the philologists writing letters from Berlin between 1836 and 1838, simply by following the data model.
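As a rough illustration of what 'following the data model' means, the sketch below builds the three tables in an in-memory SQLite database using Python's built-in `sqlite3` module and runs that query. The table and column names, the placeholder people ('Person A' etc.), and the letters are all assumptions made for this example; a real project would use its own model and data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE people (id TEXT PRIMARY KEY, name TEXT, occupation TEXT);
CREATE TABLE cities (id TEXT PRIMARY KEY, name TEXT);
CREATE TABLE letters (
    id INTEGER PRIMARY KEY,
    date TEXT,  -- ISO 8601 (YYYY-MM-DD), so text comparison follows date order
    sender_id TEXT REFERENCES people(id),
    recipient_id TEXT REFERENCES people(id),
    sent_from_id TEXT REFERENCES cities(id)
);
""")

# Placeholder records, invented for this example.
conn.executemany("INSERT INTO people VALUES (?, ?, ?)", [
    ("P1", "Person A", "philologist"),
    ("P2", "Person B", "publisher"),
    ("P3", "Person C", "philologist"),
])
conn.executemany("INSERT INTO cities VALUES (?, ?)", [
    ("C1", "Berlin"),
    ("C2", "Bratislava"),
])
conn.executemany("INSERT INTO letters VALUES (?, ?, ?, ?, ?)", [
    (1, "1837-04-12", "P1", "P2", "C1"),  # philologist, Berlin, in range
    (2, "1837-06-01", "P2", "P1", "C1"),  # sender is not a philologist
    (3, "1839-01-10", "P1", "P3", "C1"),  # outside the date range
    (4, "1836-11-30", "P3", "P1", "C2"),  # not sent from Berlin
    (5, "1838-12-31", "P3", "P2", "C1"),  # philologist, Berlin, in range
])

# Follow the relationships: letter -> sender (people) and letter -> city.
query = """
SELECT DISTINCT people.name
FROM letters
JOIN people ON people.id = letters.sender_id
JOIN cities ON cities.id = letters.sent_from_id
WHERE people.occupation = 'philologist'
  AND cities.name = 'Berlin'
  AND letters.date BETWEEN '1836-01-01' AND '1838-12-31'
ORDER BY people.name
"""
philologists = [row[0] for row in conn.execute(query)]
print(philologists)
```

Each `JOIN` corresponds to one relationship in the data model, which is why a clear model makes queries like this straightforward to write.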

- A conceptual data model on epistolary relations

- A logical data model on epistolary relations

- Log in to nodegoat.net with the username 'demo' and the password 'demo' and go to Model to see how this data model has been developed as an interface.

Now that you are able to conceptualise a data model that can host your data, you are ready for a next step: how to deal with all these uncertain dates, ambiguous statements, and conflicting source material. These challenges are covered in the next blog post "Formulating Ambiguity in a Database".
