URL: https://nodegoat.uva.nl/

Projects: https://ernie.uva.nl, https://pasdarmes.org/database, https://merchantsmarks.org/database

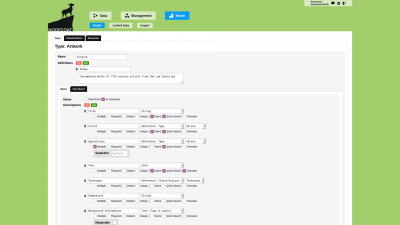

Use nodegoat to build your own data model. All of nodegoat's functionalities are tailored to your research questions.

Data Modelling

Use nodegoat to create new datasets collaboratively or alone. Explore data by means of spatial and temporal visualisations. The built-in network analysis tools reveal patterns and central nodes.

Data Creation & ExplorationUse nodegoat to publish your data in interactive visualisations, as an API, or export data publications.

Data PublicationURL: https://nodegoat.uva.nl/

Projects: https://ernie.uva.nl, https://pasdarmes.org/database, https://merchantsmarks.org/database

URL: https://nodegoat.unibe.ch/

More info: https://dhbern.github.io/content/services/nodegoat-go/, https://www.dh.unibe.ch/dienstleistungen/nodegoat_go/index_ger.html

Projects: https://rag-online.org/datenbank/abfrage, https://www.readingthebeach.unibe.ch/, https://nodegoat.unibe.ch/viewer.p/32, etc.

URL: https://nodegoat.wesleyan.edu/

More info: https://www.wesleyan.edu/digitalscholarship/Tools.html

Projects: http://travelerslab.research.wesleyan.edu/

URL: https://nodegoat.dasch.swiss/

More info: https://rise.unibas.ch/en/news/details/nodegoat-an-der-universitaet-basel/

URL: https://nodegoat.hiu.cas.cz/

More info: https://www.hiu.cas.cz/nodegoat-go

URL: https://nodegoat.ugent.be/

More info: https://www.ghentcdh.ugent.be/services/collaborative-databases

Projects: https://www.tic.ugent.be/, https://www.gcdh.ugent.be/projects/pyramids-and-progress-belgian-expansionism-and-making-egyptology-1830-1952

URL: https://nodegoat.dhlab.lu/

Projects: https://warlux.uni.lu/, etc.

Every nodegoat API is now described by an OpenAPI Description (OAD). A .yaml file is automatically generated based on the current configuration of any project specific API. This machine-readable document describes all available endpoints for the selected project.

Example of an output, available via https://demo.nodegoat.io/project/1.openapi:

---

openapi: 3.1.1

info:

title: Demo - Correspondence Networks

version: "20251112.154849"

description: API description for Project "Correspondence Networks"

externalDocs:

url: https://documentation.nodegoat.net/API

description: Full documentation for Data API.

servers:

- url: https://demo.nodegoat.io/project/1/

description: ""

paths:

/{id}:

get:

summary: Get Objects based on an identifier.

parameters:

- $ref: '#/components/parameters/data.identifier.path'

responses:

200:

content:

application/json:

schema:

$ref: '#/components/schemas/data.type_objects.json'

application/ld+json:

schema:

$ref: '#/components/schemas/data.type_objects.jsonld'

description: List of one or more Objects that match the given identifier.

/:

get:

summary: Get Objects based on an identifier.

parameters:

- $ref: '#/components/parameters/data.identifier.query'

responses:

200:

content:

application/json:

schema:

$ref: '#/components/schemas/data.type_objects.json'

application/ld+json:

schema:

$ref: '#/components/schemas/data.type_objects.jsonld'

description: List of one or more Objects that match the given identifier.

/data/type/{type_id}/object/:

get:

summary: Get Objects from a Type.

parameters:

- $ref: '#/components/parameters/model.type_id'

- $ref: '#/components/parameters/other.search'

- $ref: '#/components/parameters/other.limit'

- $ref: '#/components/parameters/other.offset'

- $ref: '#/components/parameters/other.filter'

- $ref: '#/components/parameters/other.scope'

- $ref: '#/components/parameters/other.condition'

responses:

200:

content:

application/json:

schema:

$ref: '#/components/schemas/data.type_objects.json'

application/ld+json:

schema:

$ref: '#/components/schemas/data.type_objects.jsonld'

description: List of Objects for the given Type ID and the query parameters.

[...]

Now that this description is in place, the output can be validated by means of the Swagger validator, via https://validator.swagger.io/. Enter 'https://demo.nodegoat.io/project/1.openapi' in the input field to explore the API. nodegoat user without a sub-domain for their research environment can use this modified validator that applies the authentication before the initial request.[....]

In the next couple of weeks we will be running these events at various locations throughout Europe, plus one virtual event. Follow the links below to learn more about each event and the register:

Find the latest information about this here: https://nodegoat.net/workshop

The Institute of History of the Czech Academy of Sciences organises a nodegoat Day on Wednesday 12 November 2025 in Prague. The event will include a talk by Kaspar Gubler, presentations of nodegoat projects, as well as a demonstration of new nodegoat features.

You can learn more about the event and Call for Participation here. [....]

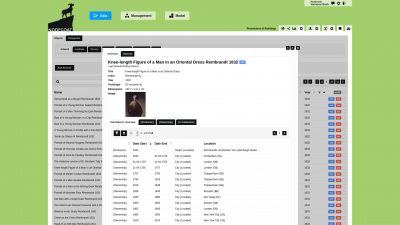

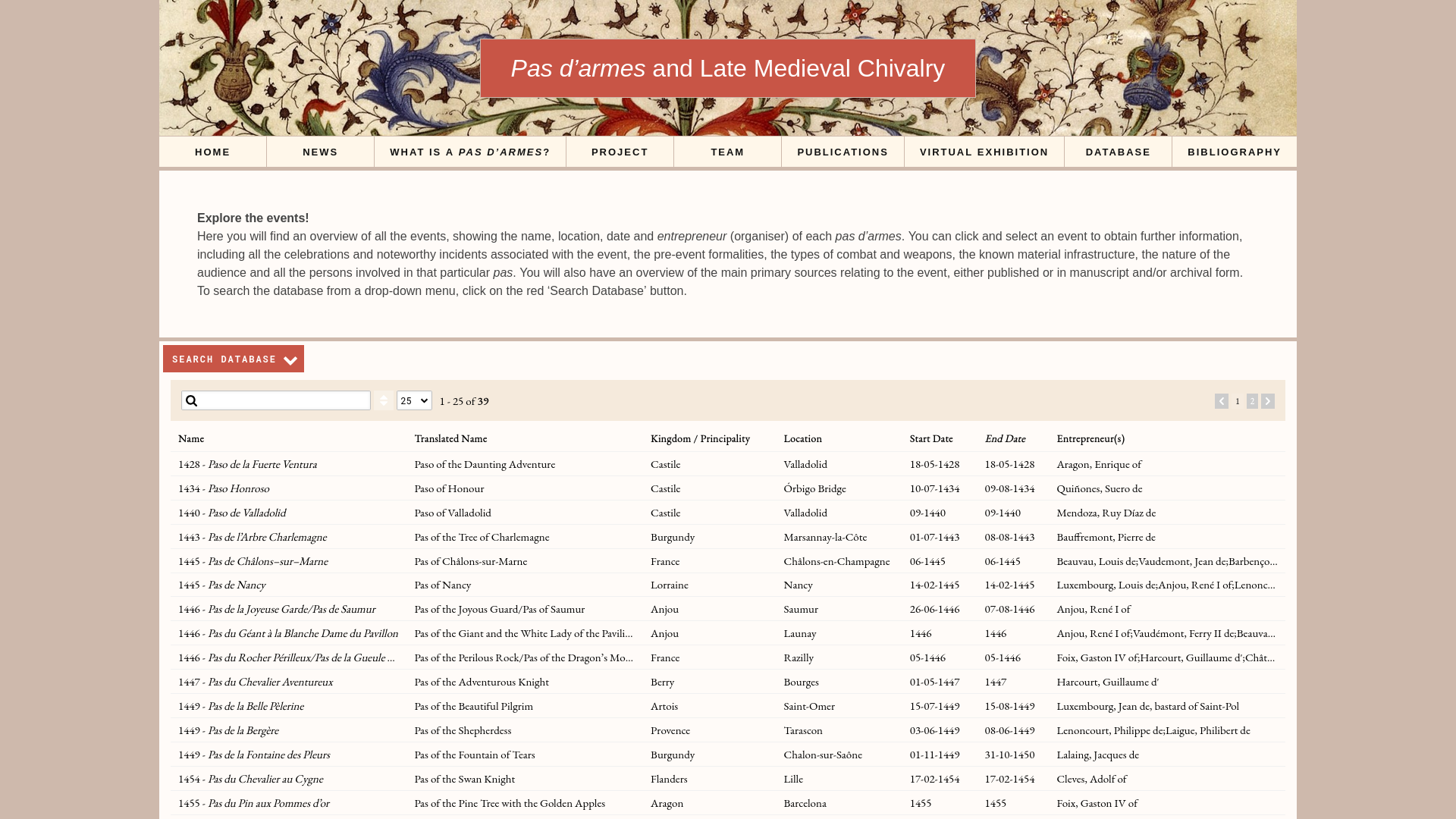

The project 'The Joust as Performance: Pas d’armes and Late Medieval Chivalry' has released a data publication that contains details of all pas d’armes held between c.1420 and c.1520. The dataset includes the following information: exact dates and locations, names of entrepreneurs and challengers, composition of teams, type of combat, theatrical scenario, ephemeral architecture, guests and spectators. The data can be downloaded via this link and had been published in both JSON and CSV formats. The database was created by Mario Damen, Jacob Deacon, and other team members in nodegoat. The publication was generated by means of the nodegoat Data Publication Module.

Scholars who want to explore this data by means of an interface may consult the database that has been published on the project website. These results have also been published in an open-access book.

The project also created a virtual exhibition in order to share the results of the project with a wider audience.[....]

Join us next week in the Aula Magna of the Biblioteca Universitaria di Bologna for a public lecture on nodegoat "Working with nodegoat: an Introduction to Historical Data Analysis and Visualisation". The public lecture takes place on Thursday March 6 between 10:00 and 12:00.

The Österreichisches Archäologisches Institut of the Österreichische Akademie der Wissenschaften organises a nodegoat workshop on Thursday 20 March between 09.30 and 17.00 in Vienna. You can learn more about the event and registration process here.

Thanks to Nirvana Silnovic for organising this event.

Two opportunities to learn more about using nodegoat for your research projects!

On January 22 we will organise a nodegoat workshop together with the GRACPE project (Grup de Recerca sobre l'Arqueologia de la Complexitat i els Processos d'Evolució social) at the Faculty of Geography and History, University of Barcelona. The venue will be Sala Jane Adams (Carrer de Montalegre, 6) and the workshop will take place on January 22 between 9AM and noon. The entry is free and no registration is needed. For further information please contact: sd.prehistoria.arqueologia@ub.edu

Thanks to Maria Del Rocio Da Riva Muñoz for organising this event.

On January 23 we will organise a nodegoat workshop together with the project 'Narremas y Mitemas: Unidades de Elaboración Épica e Historiográfica' at the Facultad de Filosofía y Letras, Universidad de Zaragoza. The venue will be Sala de Grados (Pedro Cerbuna, 12) and the workshop will take place on January 23 between 4PM and 7PM. More info and registration: https://eventos.unizar.es/127926/detail/nodegoat-workshop-an-introduction-to-a-web-based-research-environment-for-the-humanities.html

Thanks to Celia Delgado Mastral and Marta Añorbe Mateos for organising this event.

Publish your project with the new data publication module. nodegoat users can now select any project to generate a data publication that is web-accessible and downloadable as a ZIP-file. By generating a new publication a Project's data model and all of its data are published and archived. The publication remains accessible also when new publications are generated at a later stage.

Publications are stand-alone self-containing archives which include both the HTML-interface to the data model as well as all of its data in both JSON and CSV.

This new publish feature extends the existing data extraction and data publication options, i.e.: the export functionality, the API, and the public user interface.[....]

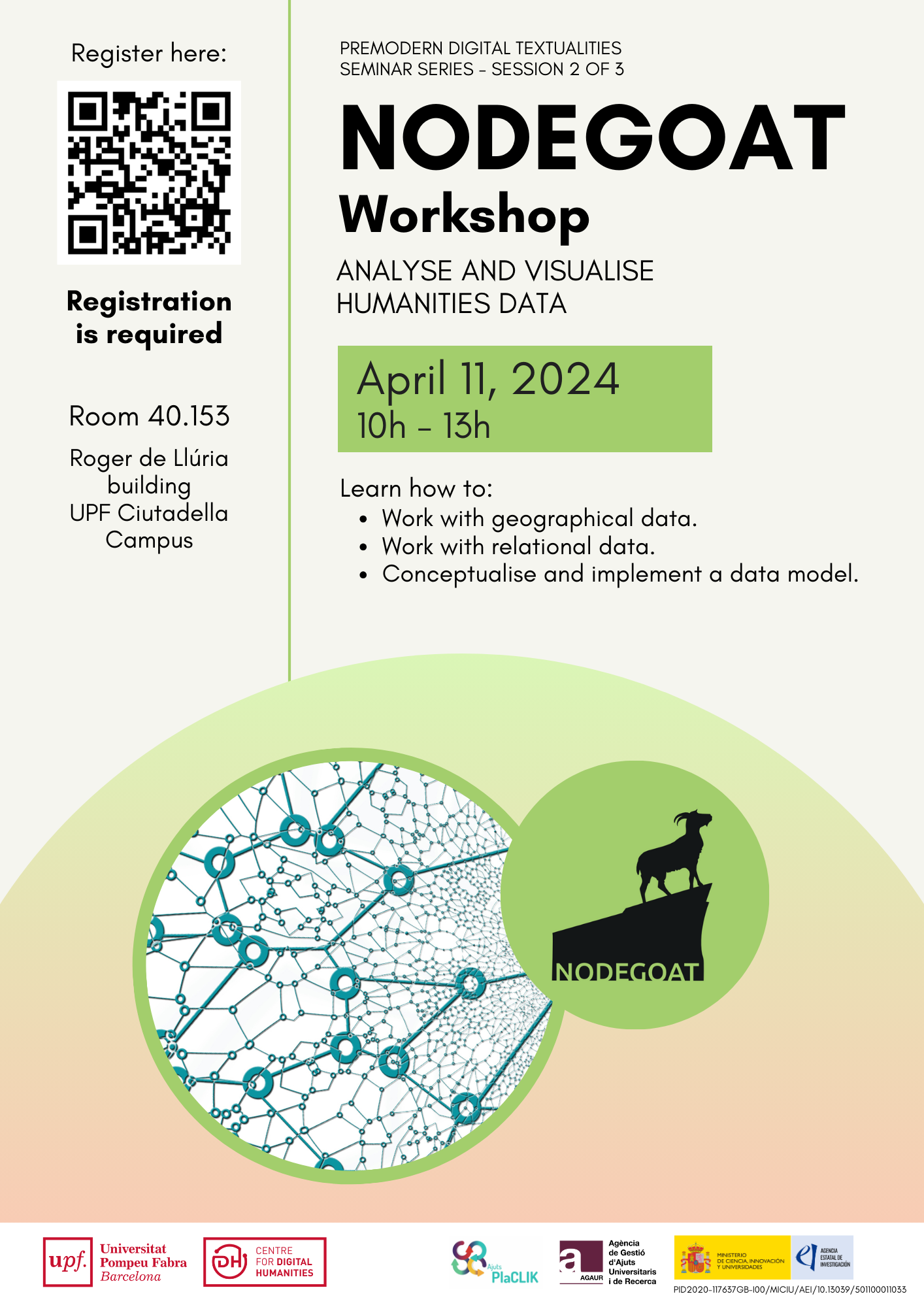

Join us on April 11 between 10:00 and 13:00 for a nodegoat workshop at the Centre for Digital Humanities of the Universitat Pompeu Fabra (UPF-DH) in Barcelona. The workshop is organised as part of the "Premodern Digital Textualities" series. This is an in-person event. You can find more about the workshop via this link and you can register here.

Thanks to Marija Blašković for organising this event.[....]

Nous sommes ravis d'annoncer que la ressource en ligne "The Programming Historian en français" a publié une leçon sur la conceptualisation d'une base de données à l'aide de nodegoat : Des sources aux données, concevoir une base de données en sciences humaines et sociales avec nodegoat.

La leçon a été rédigée par Agustín Cosovschi, édité par Sofia Papastamkou et évalué par Octave Julien et Solenn Huitric.[....]

New blog post on how to connect nodegoat to LLMs: ‘Data and Dialogue: Retrieval-Augmented Generation in nodegoat’

Školení Nodegoat v českém jazyce, organizované Historickým ústavem AV ČR, se uskuteční 4. února 2026 v konferenčním sálu ÚTAM (suterén budovy, Prosecká 809/76, Praha 9).

nodegoat 8.5 is here: https://nodegoat.net/release ☃️🐐

Every #nodegoat API is now described by an OpenAPI Description (OAD).

Many thanks to the Institute of History of the Czech Academy of Sciences for organising yesterday’s nodegoat Day in Prague!

In the context of GrECI Twinning research project (www.greci-twinning.org), we are organising a one-day training workshop on digital applications for humanities-related research, titled Exploring Spatial and Network Data in nodegoat. The workshop will be held on the 4th of September at the University of Cyprus, in the Stelios Ioannou Learning Resource Center.

The Institute of History of the Czech Academy of Sciences organises a nodegoat Day on Wednesday 12 November 2025 in Prague. The event will include a talk by Kaspar Gubler, presentations of nodegoat projects, as well as a demonstration of new nodegoat features.

New use case by Pierluigi Terenzi (University of Florence) on the LASI project that analyses workers and decisions related to the Opera di Santa Maria del Fiore in the second half of the 14th century.

Blog post about a data publication: "Pas d'armes" and Late Medieval Chivalry

|

Geographic visualisation of biographies of scholars. Tobias Winnerling (Heinrich-Heine-Universität Düsseldorf), project: "Wer Wissen schafft. Gelehrter Nachruhm und Vergessenheit 1700 – 2015". |

Social Network Graph of the network around Dutch engineer Cornelis Meijer. Project: "Mapping Notes and Nodes in Networks". |